Comet for image data problems

This example looks at the problem of multiclass image classification with the MNIST dataset.

Image classification is a common task for neural networks. Comet comes with a suite of built-in tools to help you debug this type of model. Some of the features you can apply to this problem are:

- Image Logging

- Confusion Matrix

Create an Experiment

The first step in tracking our run is to create an Experiment:

    import comet_ml

    experiment = comet_ml.Experiment(
        api_key="<Your API Key>",
        project_name="<Your Project Name>"
    )

Note

There are alternatives to setting the API key programmatically. See more here.
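One such alternative, sketched here under assumptions, is to export the credentials as environment variables before the script starts. `COMET_API_KEY` and `COMET_PROJECT_NAME` are the variable names the Comet SDK is understood to read, which is worth verifying against the configuration documentation. The helper `missing_comet_env` is hypothetical and only checks that the variables are present.

```python
import os

# Variable names the Comet SDK is understood to read (an assumption to verify).
REQUIRED_VARS = ("COMET_API_KEY", "COMET_PROJECT_NAME")


def missing_comet_env(env=None):
    """Return the required variable names that are unset or empty (hypothetical helper)."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


# Example with an explicit mapping instead of the real environment.
print(missing_comet_env({"COMET_API_KEY": "abc123"}))  # ['COMET_PROJECT_NAME']
```

When nothing is reported missing, `comet_ml.Experiment()` can typically be called without arguments, which keeps the key out of the source code.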

Define parameters

Let's define a few training parameters for our model:

    # these will all get logged
    params = {
        "batch_size": 128,
        "epochs": 2,
        "layer1_type": "Dense",
        "layer1_num_nodes": 64,
        "layer1_activation": 'relu',
        "optimizer": 'adam',
    }

    experiment.log_parameters(params)

Train the model

Since we're using Keras as our framework, Comet automatically logs the configured model parameters, the model graph, and training metrics, without any additional instrumentation code.

Note

You can find out more about Comet's automatic logging features for your preferred machine learning framework in the Integrations section.
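The training code in this section uses `x_train`, `y_train`, `x_test`, `y_test`, and `num_classes` without defining them. The following is a minimal sketch of one way to prepare them, assuming the usual MNIST layout of 28x28 `uint8` images with integer labels from 0 to 9. The helper name `prepare_mnist` is hypothetical, and a small random batch stands in for the real dataset so that the sketch runs offline.

```python
import numpy as np


def prepare_mnist(images, labels, num_classes=10):
    """Flatten 28x28 images to 784 floats in [0, 1] and one-hot encode the labels."""
    x = images.reshape(len(images), -1).astype("float32") / 255.0
    y = np.eye(num_classes, dtype="float32")[labels]
    return x, y


# Tiny synthetic batch standing in for MNIST (not real data).
demo_images = np.random.randint(0, 256, size=(4, 28, 28), dtype=np.uint8)
demo_labels = np.array([3, 0, 9, 3])
demo_x, demo_y = prepare_mnist(demo_images, demo_labels)
print(demo_x.shape, demo_y.shape)  # (4, 784) (4, 10)
```

With the real dataset, `keras.datasets.mnist.load_data()` returns `(x_train, y_train), (x_test, y_test)`, and each pair can be passed through the same helper, with `num_classes = 10`. The flattened shape matches the `input_shape=(784,)` of the first layer, and the one-hot labels match the `categorical_crossentropy` loss.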

    # Assumes the standalone `keras` package; swap in `tensorflow.keras` if needed.
    from keras.layers import Dense
    from keras.models import Sequential

    # x_train, y_train, x_test, y_test, and num_classes must already be defined.
    model = Sequential()
    model.add(Dense(64, activation="relu", input_shape=(784,)))
    model.add(Dense(num_classes, activation="softmax"))

    # model.summary() prints to stdout, which Comet preserves in the `Output` tab
    model.summary()

    model.compile(
        loss="categorical_crossentropy",
        optimizer=params['optimizer'],
        metrics=["accuracy"],
    )

    # will log metrics with the prefix 'train_'
    model.fit(
        x_train,
        y_train,
        batch_size=params['batch_size'],
        epochs=params['epochs'],
        verbose=1,
        validation_data=(x_test, y_test),
    )

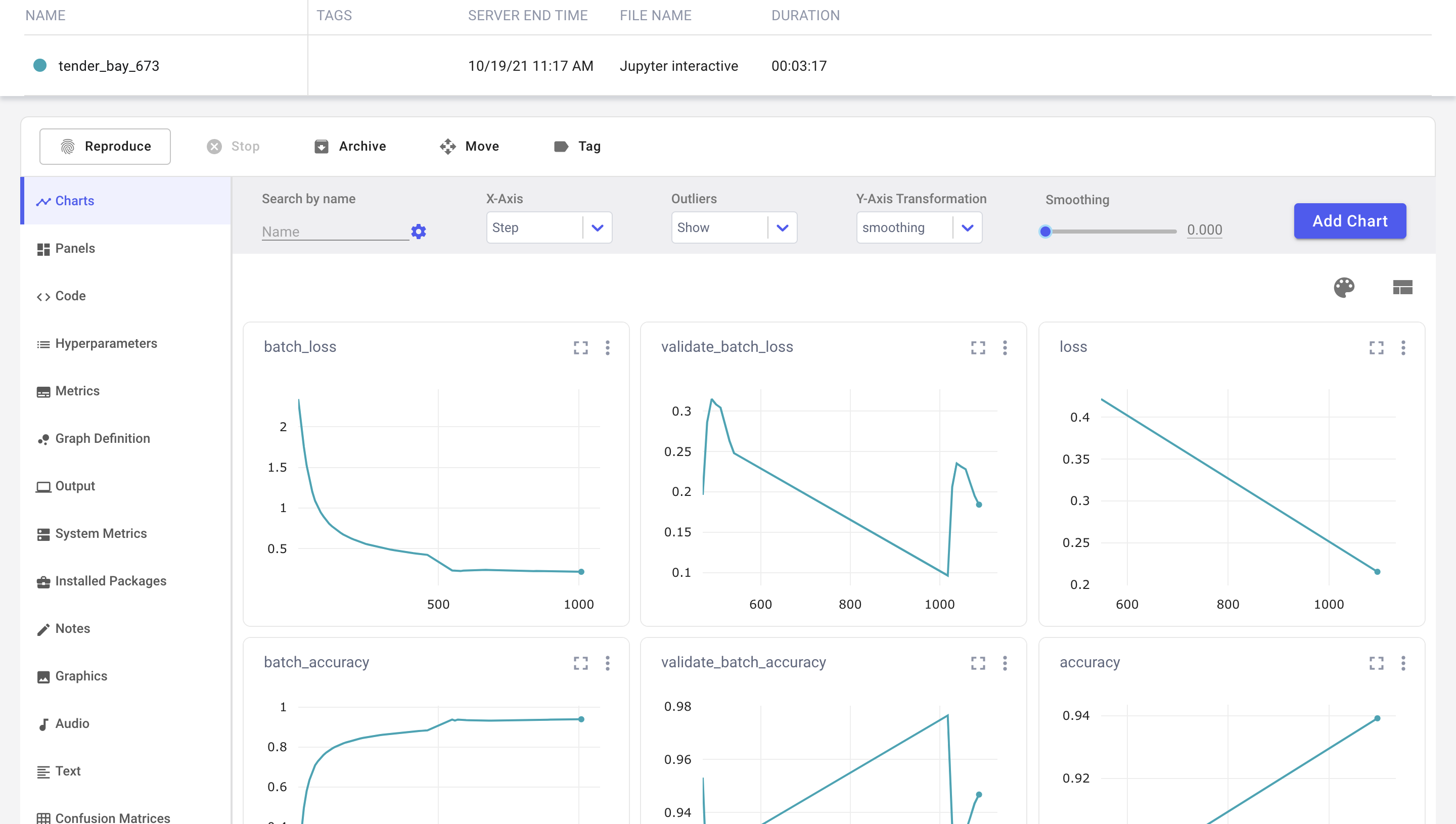

Model training metrics are automatically logged and are visible in the Charts tab in the Experiment view.

Evaluate the model

This section covers two steps: logging evaluation metrics, and logging images together with the confusion matrix.

Log evaluation metrics

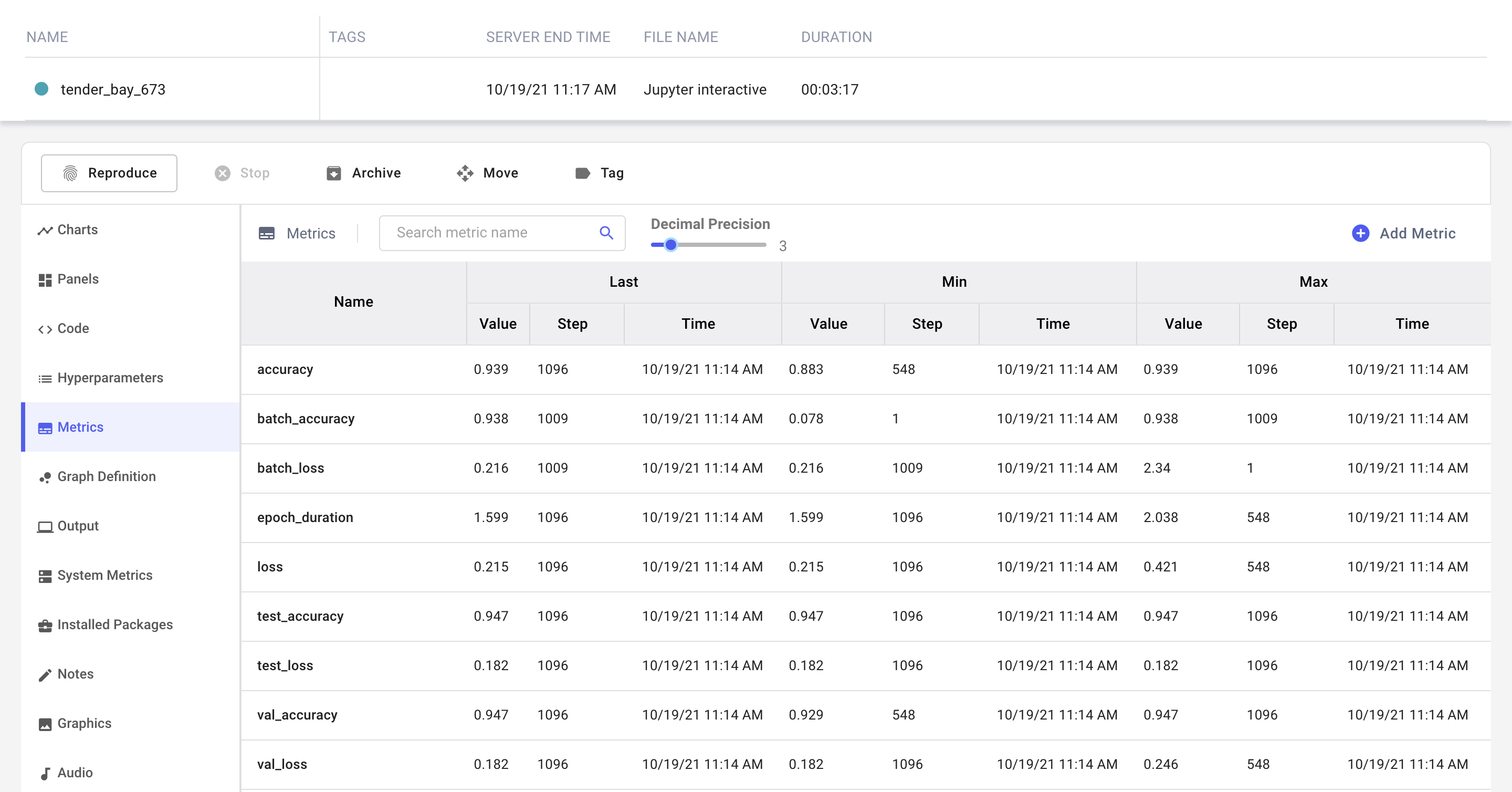

Let's evaluate our model on the test dataset. Wrapping the logging call in the experiment.test() context manager sets the Experiment context, so Comet adds the matching prefix to the metric names for us.

    loss, accuracy = model.evaluate(x_test, y_test)
    metrics = {"loss": loss, "accuracy": accuracy}

    with experiment.test():
        experiment.log_metrics(metrics)

As you can see, the appropriate context for these metrics has been added as a prefix to the logged metrics.
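To make the naming concrete, here is a small sketch of how a context prefix changes metric names. It assumes the same underscore separator as the 'train_' prefix mentioned for training metrics. The function `with_context_prefix` is hypothetical and only mimics the resulting names; it is not Comet's implementation, and the metric values are made up.

```python
def with_context_prefix(metrics, context):
    """Return a copy of `metrics` whose keys carry `<context>_` as a prefix."""
    return {f"{context}_{name}": value for name, value in metrics.items()}


example_metrics = {"loss": 0.12, "accuracy": 0.97}  # made-up values for illustration
print(with_context_prefix(example_metrics, "test"))
# {'test_loss': 0.12, 'test_accuracy': 0.97}
```

Keeping the metric dictionary free of prefixes and letting the context supply them means the same keys can be reused for training, validation, and test results.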

Log images and the confusion matrix

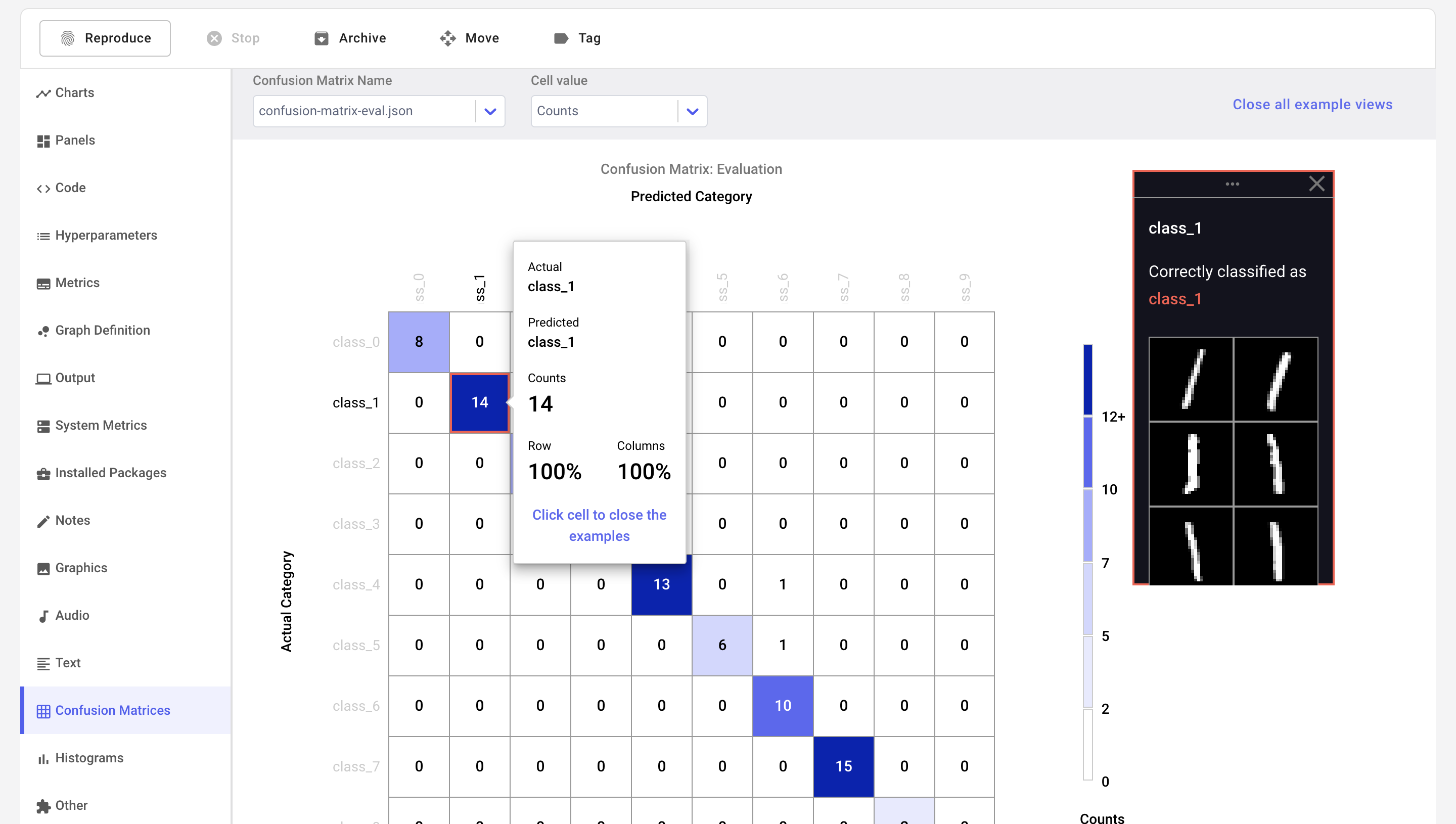

Comet can log images with the experiment.log_image method. Here we combine image logging with the Confusion Matrix by passing the test images to experiment.log_confusion_matrix, so that samples from our dataset are logged and misclassified images can be identified in the UI.

    # Assumes `predictions` holds the model's outputs for x_test,
    # e.g. from model.predict(x_test).
    # Logs the image corresponding to the model prediction
    experiment.log_confusion_matrix(
        y_test,
        predictions,
        images=x_test,
        title="Confusion Matrix: Evaluation",
        file_name="confusion-matrix-eval.json",
    )

Clicking the off-diagonal cells in the confusion matrix displays a window with information about misclassified examples.
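The following sketch shows what the cells of such a matrix count and why the off-diagonal ones correspond to misclassified examples. It is an illustration in plain Python, not Comet's implementation, and it assumes that rows are true classes, that columns are predicted classes, and that both inputs are one-hot or per-class score rows. The sample data is made up.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Count samples per (true class, predicted class) pair and list misclassified indices."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    misclassified = []
    for index, (true_row, pred_row) in enumerate(zip(y_true, y_pred)):
        # The class of a row is the position of its largest entry (argmax).
        true_class = max(range(num_classes), key=lambda c: true_row[c])
        pred_class = max(range(num_classes), key=lambda c: pred_row[c])
        matrix[true_class][pred_class] += 1
        if true_class != pred_class:
            misclassified.append(index)
    return matrix, misclassified


# Three made-up samples over 3 classes; the last is predicted as class 0 instead of 2.
y_true = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
y_pred = [[0.8, 0.1, 0.1], [0.2, 0.7, 0.1], [0.6, 0.1, 0.3]]
matrix, misclassified = confusion_matrix(y_true, y_pred, num_classes=3)
print(matrix)         # [[1, 0, 0], [0, 1, 0], [1, 0, 0]]
print(misclassified)  # [2]
```

The single off-diagonal count at row 2, column 0 corresponds to sample index 2, which is the kind of example the UI surfaces when an off-diagonal cell is clicked.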

To learn more about how to log confusion matrices to Comet, check out this interactive confusion matrix tutorial.

Try it out!

We have prepared a Colab Notebook that you can use to run the example yourself.

More examples

Other typical end-to-end examples showcase how Comet is used to handle the challenges presented by structured data and natural language processing (NLP).