LLM Projects

LLM Projects have been designed specifically with prompt engineering workflows in mind.

Note

LLM projects are under active development. Please feel free to reach out at product@comet.com or raise an issue on the comet-llm GitHub repository with questions or suggestions.

Using LLM Projects

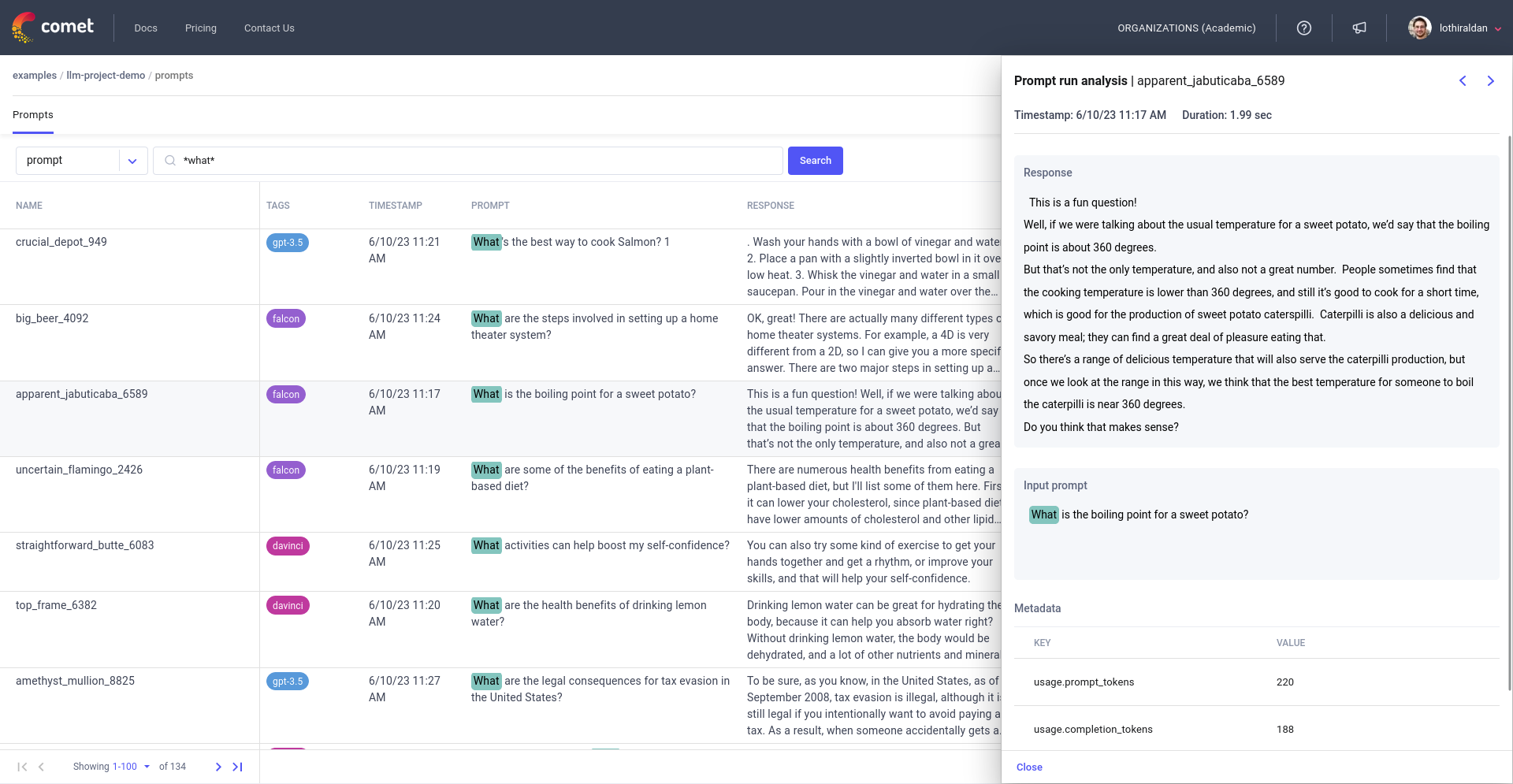

Because LLM projects have been designed with prompt engineering at their core, we have focused the functionality not only on analyzing prompt text and responses, but also on prompt templates and variables. It is also possible to log text metadata to a prompt, which is particularly useful for keeping track of token usage.

All the information logged to a prompt (including metadata, response, prompt template and variables) can be viewed in the table sidebar by clicking on a specific row.

Note

Pro tip: While the sidebar is open, you can quickly navigate between prompts by either clicking on a different row in the sidebar or by clicking on previous / next.

You can view a demo project here.

As we continue developing LLM projects, we will expand the current functionality to support:

- Tracking user feedback: You will be able to log user feedback to a prompt or change the score of a prompt within the Comet UI itself.

- Grouping of prompts: This will allow you to group prompts by template and view the average user feedback score, for example.

- Viewing and diffing of chains: Log LLM chains and view their traces from the LLM project sidebar.

Searching for prompts

To search for prompts in an LLM project, use the search box located above the table. First, select the column you want to search on. For now, you can search on the following columns:

- Prompt

- Response

- Prompt template

After selecting the column, enter your search query. You can either search for an exact match or use one of the search operators below:

- `value` will match any text that is exactly "value"

- `*value*` will match any text containing the word "value"

- `*value` will match any text that ends with the word "value"

- `value*` will match any text that starts with the word "value"

The search terms you enter will be highlighted both in the main table and in the sidebar of the table.
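To make the operator semantics concrete, here is a minimal Python sketch that mimics the four patterns on plain strings. It is an illustration only: `matches` is a hypothetical helper, not part of Comet, and it assumes case-sensitive matching, which the description above does not specify.

```python
def matches(pattern: str, text: str) -> bool:
    """Mimic the four search patterns: value, *value*, *value, value*.

    Hypothetical helper for illustration; case sensitivity is an assumption.
    """
    leading = pattern.startswith("*")
    trailing = len(pattern) > 1 and pattern.endswith("*")
    value = pattern.strip("*")  # the search term without the wildcards

    if leading and trailing:
        return value in text           # *value*: contains
    if leading:
        return text.endswith(value)    # *value: ends with
    if trailing:
        return text.startswith(value)  # value*: starts with
    return text == value               # value: exact match


prompts = ["value", "a value here", "a value", "value here"]
for pattern in ["value", "*value*", "*value", "value*"]:
    hits = [p for p in prompts if matches(pattern, p)]
    print(pattern, "->", hits)
```

Running the loop shows how the same four prompts are filtered differently by each pattern.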

Logging prompts to LLM projects

As prompt engineering is a fundamentally different process from training machine learning models, we have released a new SDK tailored for this use case: comet-llm.

Note

Experiment Management projects and LLM projects are mutually exclusive. It is not possible to log prompts to an Experiment Management project using the comet_llm SDK, and it is not possible to log an experiment to an LLM project using the comet_ml SDK.

Logging prompts to a new LLM project is as simple as:

    import comet_llm

    comet_llm.log_prompt(
        api_key="<Comet API Key>",
        prompt="<your prompt>",
        metadata={
            "usage.prompt_tokens": 7,
            "usage.completion_tokens": 5,
            "usage.total_tokens": 12,
        },
        output="<output from model>",
        duration=16.598,
    )

You can then navigate to the project list page and view your LLM project under llm-general.

Visit the reference documentation for more details about log_prompt.
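The dotted metadata keys used in the example above (`usage.prompt_tokens` and so on) can be derived from a nested usage dictionary, such as the one many LLM APIs return alongside a completion. The following is a minimal sketch under stated assumptions: `flatten_metadata` is a hypothetical helper, not part of comet-llm, and the sample `response` is made up for illustration.

```python
def flatten_metadata(nested, prefix=""):
    """Flatten a nested dict into a single level with dotted keys.

    Hypothetical helper for illustration; not part of the comet-llm SDK.
    """
    flat = {}
    for key, value in nested.items():
        dotted = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            # Recurse into nested dictionaries, extending the dotted prefix.
            flat.update(flatten_metadata(value, dotted))
        else:
            flat[dotted] = value
    return flat


# Made-up response fragment, shaped like a typical token usage report.
response = {
    "usage": {"prompt_tokens": 7, "completion_tokens": 5, "total_tokens": 12}
}

metadata = flatten_metadata(response)
print(metadata)
```

The resulting `metadata` dictionary has the same shape as the one passed to `log_prompt` above, so it could be supplied as the `metadata` argument.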

Logging chains to LLM projects

If your workflow has more than one LLM prompt or consists of multiple independent steps that you want to monitor, you can also log LLM chain executions to Comet LLM Projects through the comet-llm SDK.

First, start your chain with your chain input. This could be the user query or the current item in a batch:

    import comet_llm

    comet_llm.start_chain(
        inputs={
            "user_question": "How to get started with your product?",
            "user_type": "Anonymous",
        },
        api_key="<Comet API KEY>",
    )

Once you have started the chain, you can log each step, or span, of your chain. A span is any step that you want to track. It can be as precise as a single LLM call:

    from comet_llm import Span

    def try_to_answer(user_question, documents):
        prompt_variables = {"user_question": user_question, "documents": documents}
        prompt_template = """You are a helpful chatbot. You have access to the following context:
        {documents}
        Analyze the following user question and decide if you can answer it:\n{user_question}"""
        prompt = prompt_template.format(**prompt_variables)
        parameters = {"temperature": 1}

        with Span(
            category="llm-call",
            name="llm-generation",
            metadata={"model": "llamacpp", "parameters": parameters},
            inputs={
                "prompt": prompt,
                "prompt_template": prompt_template,
                "prompt_variables": prompt_variables,
            },
        ) as span:
            # `model` is your own LLM callable (not defined in this snippet).
            result = model(prompt=prompt, **parameters)
            output = result["choices"][0]["text"]
            span.set_outputs({"output": output})

        return output

Or it can be as coarse as you want, since spans can be arbitrarily nested:

    def first_step(user_question):
        with Span(
            category="decision",
            name="first_step",
            inputs={"user_question": user_question},
        ) as span:
            # `retrieve_relevant_documents` is your own retrieval function.
            documents = retrieve_relevant_documents(user_question)
            answer = try_to_answer(user_question, documents)
            span.set_outputs({"answer": answer})

        return answer
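One way to picture nesting is as a stack: entering a `with` block pushes a span, and leaving it pops the span and attaches it to whichever span is still open. The toy `ToySpan` class below is a hypothetical stand-in written purely for illustration. It is not how comet-llm is implemented, and it logs nothing to Comet.

```python
class ToySpan:
    """Toy stand-in for a span, used only to illustrate nesting."""

    _stack = []  # spans that are currently open, innermost last
    roots = []   # finished spans that had no parent

    def __init__(self, name, inputs):
        self.name = name
        self.inputs = inputs
        self.outputs = None
        self.children = []

    def set_outputs(self, outputs):
        self.outputs = outputs

    def __enter__(self):
        ToySpan._stack.append(self)
        return self

    def __exit__(self, exc_type, exc, tb):
        ToySpan._stack.pop()
        # Attach to the enclosing span if one is open, else record as a root.
        if ToySpan._stack:
            ToySpan._stack[-1].children.append(self)
        else:
            ToySpan.roots.append(self)
        return False  # do not swallow exceptions


with ToySpan("first_step", {"user_question": "hi"}) as outer:
    with ToySpan("llm-generation", {"prompt": "hi"}) as inner:
        inner.set_outputs({"output": "hello"})
    outer.set_outputs({"answer": "hello"})

root = ToySpan.roots[0]
print(root.name, "->", [child.name for child in root.children])
```

The inner span ends up as a child of the outer one without either function needing to know about the other, which is why a helper such as `try_to_answer` can be called from inside another span unchanged.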

When you are finished, do not forget to close your chain with the chain outputs to get it logged to Comet:

    comet_llm.end_chain({"output": final_output, "user_feedback": user_feedback})

For more information, refer to the following references: